If the use of generative AI in the workplace didn’t seem complicated enough already, just consider what it means for big cities and other governmental agencies.

The implications of common AI problems such as algorithmic bias and attribution of intellectual property are magnified in the public sector, and further complicated by unique challenges such as the retention and production of public records.

Jim Loter, interim chief technology officer for the City of Seattle, grappled with these issues as he and his team produced the city’s first generative AI policy this spring.

Loter recently presented on the topic to the U.S. Conference of Mayors annual meeting in Columbus, Ohio. Seattle Mayor Bruce Harrell chairs the group’s Technology and Innovation Committee.

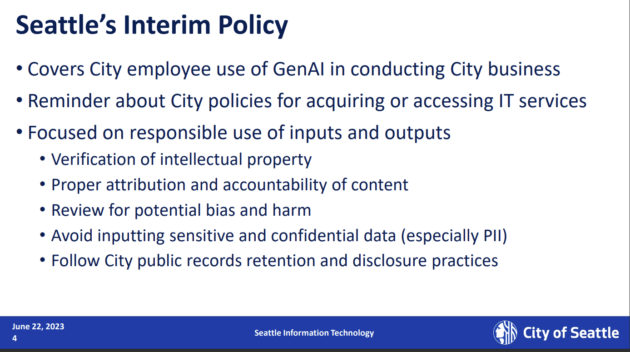

As a starting point, Seattle’s policy requires city employees to receive permission from Seattle’s information technology department before using generative AI as part of their work. The city describes this as its standard operating practice “for all new or non-standard technology.”

Public records are a key part of the consideration.

“One of the most common conversations we have with city staff who come to us and want to start using a particular software tool, is to ask them, what is your strategy for managing the records that you are producing using this tool, and for allowing your public disclosure officer to get access to them when they are requested?” Loter says on a new episode of the GeekWire Podcast.

It's not a hypothetical consideration, he explained, noting that the city has already received a public records request covering any and all instances of city employees interacting with ChatGPT.

How are city employees considering using AI? Here are a few examples Loter gave on the podcast.

- First drafts of city communications.

- Analysis of documents and reports.

- Language translation for citizens.

Loter's presentation to the U.S. Conference of Mayors listed a number of novel risks and concerns that generative AI raises for municipalities:

- Lack of transparency about the source data for models

- Algorithmic transparency is also still an issue

- Accountability for intellectual property violations

- Difficult to assess veracity of output

- Bias in source data and algorithms can inform or skew outputs, reify existing prejudices

- Bad actors could “poison” data sources with misinformation, propaganda, etc.

- New threats to safety and security (mimicking voices, fake videos)

- Consolidation of AI technology ownership and control

“When we look at these generative AI tools, the trust, the community, and the transparency really aren’t there,” Loter said on the podcast. “We don’t have insight into the data layer, we don’t have insight into the foundation layer of these applications. We don’t have access to the product layer and understand how they’re being implemented. Nor is there a community, a trusted community, involved in the production or the vetting of those tools.”

He continued, “It may not introduce a new categorical risk. But it raises the level of risks that may already be there when people are sourcing content from a third party or from an outside source.”

How can cities approach generative AI responsibly? Here’s the summary from Loter’s presentation.

Require responsibility in review of generated content

- Copyright / intellectual property

- Truth / fact-check

- Attribution – tell people AI wrote this content

- Screen for bias

Recommend specific vetted uses

- Data: Identify patterns, don’t make decisions

- Writing: Produce summaries of reports/legislation, don’t write them

- Search: Query/interact with City-controlled data, not general data

- Coding: Help find bugs or suggest optimizations, don’t write software

Perform A/B tests against known-good products

Advocate for transparency and explainability in AI products

- What are the data sources used to build the model?

- How are decisions made?

- How is source content selected, evaluated, and moderated?

- How is illegal activity monitored and controlled for?

- If vendor is licensing AI services, what is that agreement and how is vendor prepared to enforce standards?

On that last point, the City of Seattle is a Microsoft shop, and Loter explains that the need for safeguards is one of the issues the city is discussing with the company as Microsoft incorporates OpenAI-powered technologies into Microsoft 365 and its other products.

We also talk on the podcast about digital equity initiatives and efforts to ensure that local municipalities aren’t pre-empted from exercising authority over internet service providers to try to achieve equitable broadband access.
