Sharing our thoughts with others has always required some form of active translation, whether through audible speech, the written word, or visual arts. But what if we could skip that step entirely by allowing a passive system to translate for us? A team of neuroscientists at the University of Texas at Austin has created a noninvasive system that turns brain activity into text, effectively conveying an idea without any additional effort from the user.

The system largely relies on functional magnetic resonance imaging (fMRI) and machine learning. Because fMRI tracks the blood oxygen fluctuations that accompany neural activity in the brain, it's a fair noninvasive substitute for methods like surgically implanted electrodes, which have been used in similar experiments. The imaging technique captures a high-level view of the changes that occur during various cognitive activities, including language processing, allowing researchers to see what happens in the brain when certain words or patterns are heard, read, or said.

In a paper for Nature Neuroscience, lead author and UT Austin associate professor Dr. Alexander Huth shares that he and his colleagues began by training their machine learning framework on volunteers’ brain scans. Seven volunteers listened to podcast stories while an fMRI machine captured their brain activity. Afterward, the brain scans were fed into a framework that used a GPT language model and Bayesian decoding to create predictive sequences out of more than 200 million words in the podcasts. Because every brain’s processing patterns are slightly different, each volunteer had their own “neural fingerprint” with which the decoder could parse language.
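The article doesn't spell out the decoder's internals, so the following is only a toy sketch of the general Bayesian idea: a language model supplies a prior over candidate next words, and each candidate is scored by how well its predicted brain response matches the observed one. Every name and number here (`PRIOR`, `FEATURES`, `decode_next_word`, the two-element "scans") is hypothetical and invented for illustration; none of it comes from the paper.

```python
import math

# Hypothetical toy "language model": probability of the next word
# given the previous word. Values are invented for illustration.
PRIOR = {
    "the": {"dog": 0.6, "car": 0.4},
}

# Hypothetical predicted brain responses for each word, standing in
# for a per-volunteer model (the "neural fingerprint").
FEATURES = {
    "dog": [1.0, 0.0],
    "car": [0.0, 1.0],
}


def log_likelihood(observed, predicted, noise=0.5):
    """Gaussian log-likelihood (up to a constant) of the observed response."""
    sq_err = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    return -sq_err / (2 * noise ** 2)


def decode_next_word(prev_word, observed):
    """Pick the candidate with the highest log prior + log likelihood."""
    scores = {}
    for word, prob in PRIOR[prev_word].items():
        scores[word] = math.log(prob) + log_likelihood(observed, FEATURES[word])
    best = max(scores, key=scores.get)
    return best, scores


if __name__ == "__main__":
    # The language model alone prefers "dog", but this made-up scan
    # looks much more like the predicted response for "car".
    word, scores = decode_next_word("the", [0.1, 0.9])
    print(word, scores)
```

In this toy setup the brain data can override the language model's preference, which is the point of combining the two: the prior keeps the output fluent, while the likelihood ties it to what the scan actually shows.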

Huth’s team then asked the volunteers to test the decoder itself. In some experiments, the volunteers again listened to podcast stories while the fMRI machine captured their brain activity. In others, the volunteers were instructed to imagine their own one-minute stories. Still others captured the volunteers’ brain activity as they watched short animated clips without sound. All the while, the team’s decoder translated their brain scans into text.

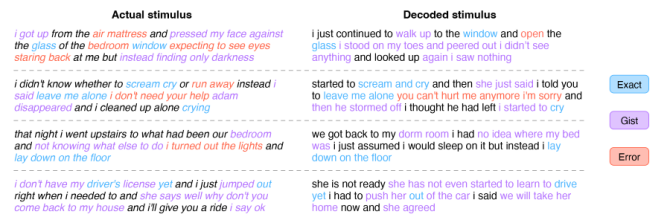

Credit: Huth et al/Nature Neuroscience/DOI 10.1038/s41593-023-01304-9

The results, while imperfect, were startlingly close to the volunteers’ stimuli. The decoder got the gist of each story; though some short phrases were mistranslated, most events and dialogue were accurate. To someone unfamiliar with the decoder, the experiments’ “before” and “after” texts might look like a story translated between two languages.

Wanting to test the idea of “mental privacy,” the neuroscientists asked volunteers to “consciously resist” the decoder at times by counting, naming different animals, or even turning their attention to their own imagined stories while listening to another stimulus. This was found to reduce the decoder’s ability to pick up on the story in question, leading the researchers to think that active mental participation might serve as a permission of sorts for the decoder to work.

So what is it for? The team believes their decoder could be useful in neuroscience research and accessibility. Not only could an fMRI-based translation system like this one help to uncover some of the brain’s biggest mysteries, but it might eventually pave the way for communication methods that support nonverbal people. In the meantime, they’re working on introducing new languages and updated language models to enhance the decoder’s translation abilities.
