Benj Edwards / Ars Technica

Over the past week, the smartphone app Lensa AI has become a popular topic on social media because it can generate stylized AI avatars based on selfie headshots that users upload. It’s arguably the first time personalized latent diffusion avatar generation has reached a mass audience.

While Lensa AI has proven popular among people on social media who like to share their AI portraits, press coverage has focused largely on reports that the app’s AI avatar feature tended to sexualize depictions of women when it launched.

A product of Prisma Labs, Lensa launched in 2018 as a subscription app focused on AI-powered photo editing. In late November 2022, the app grew in popularity thanks to its new “Magic Avatar” feature. Lensa reportedly utilizes the Stable Diffusion image synthesis model under the hood, and Magic Avatar appears to use a personalization training method similar to Dreambooth (whose ramifications we recently covered). All of the training takes place off-device and in the cloud.

In early December, women using the app noticed that Lensa’s Magic Avatar feature would create semi-pornographic or unintentionally sexualized images. For CNN, Zoe Sottile wrote, “One of the challenges I encountered in the app has been described by other women online. Even though all the images I uploaded were fully-clothed and mostly close-ups of my face, the app returned several images with implied or actual nudity.”

The reaction in the press grew as other women experienced similar things, with headlines about Lensa appearing in various publications.

Meanwhile, the same sexualization issue didn’t appear in images of men uploaded to the Magic Avatar feature. For MIT Technology Review, Melissa Heikkilä wrote, “My avatars were cartoonishly pornified, while my male colleagues got to be astronauts, explorers, and inventors.”

This is one of the dangers of basing a product on Stable Diffusion 1.x, which can easily sexualize its output by default. The behavior comes from the large quantity of sexualized images found in its image training data set, which was scraped from the Internet. (Stable Diffusion 2.x attempted to rectify this by removing NSFW material from the training set.)

The Internet’s cultural biases toward the sexualized depiction of women online have given Lensa’s AI generator its tendencies. Speaking to TechCrunch, Prisma Labs CEO Andrey Usoltsev said, “Neither us, nor Stability AI could consciously apply any representation biases; To be more precise, the man-made unfiltered data sourced online introduced the model to the existing biases of humankind. The creators acknowledge the possibility of societal biases. So do we.”

In response to the widespread criticism, Prisma Labs has reportedly been working to prevent the accidental generation of nude images in Lensa AI.

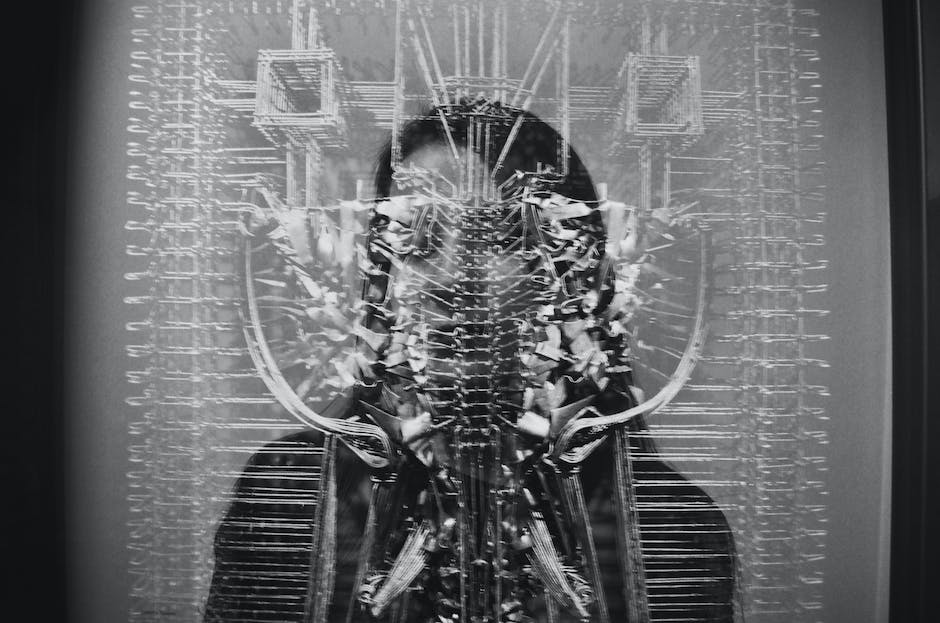

On Tuesday, we experimented with Lensa AI by uploading 15 images of a woman (and also 15 images of a man), then paid for Magic Avatar representations of each. The results we saw for the woman weren’t sexualized in any obvious way, so Prisma’s efforts to reduce the incidence of NSFW-style imagery might be working already.

A selection of female Magic Avatars that we generated using the Lensa AI app.

A selection of male Magic Avatars that we generated using the Lensa AI app.

This does not mean that Lensa AI’s tendency to sexualize female subjects doesn’t exist. Because of how Stable Diffusion generates images, different women will likely see different results depending on how closely their face (or input photos) resembles photos of a particular actress, celebrity, or model in the Stable Diffusion dataset. In our experience, that can heavily dictate how Stable Diffusion renders the rest of a person’s body and its context.

Using Lensa AI is easy, but it’s a product with a yearly subscription fee (currently $50 per year) and a one-time fee for training Magic Avatar images: $3.99 for 50 avatars, $5.99 for 100 avatars, or $7.99 for 200 avatars. Customers upload 10-20 selfies taken from different angles and then wait roughly 20-30 minutes to see the results. Users must confirm they are 18 years or older to use the service.

Those technically inclined could experiment with Dreambooth instead for free, but it currently requires some knowledge of development tools to get working.
