Meta’s Oversight Board, the Supreme Court-esque body formed as a check on Facebook and Instagram’s content moderation decisions, received nearly 1.3 million appeals of the tech giant’s decisions last year. That mammoth figure works out to roughly one appeal every 24 seconds, or about 3,537 a day. The vast majority of those appeals involved alleged violations of just two of Meta’s community standards: violence/incitement and hate speech.

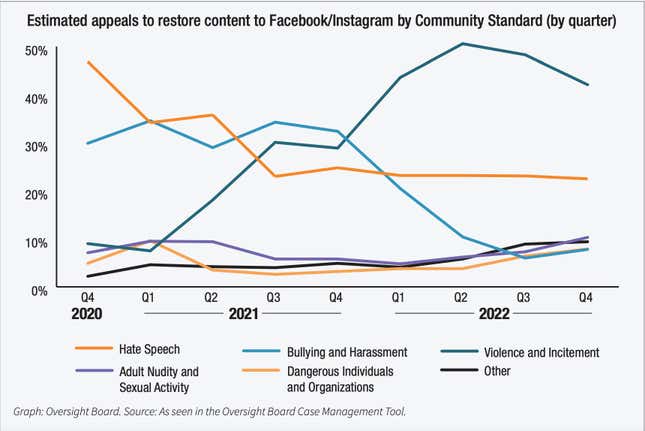

The appeal figures come from the Oversight Board’s 2022 annual report, shared with Gizmodo on Tuesday. Facebook and Instagram users appealed Meta’s content moderation decisions 1,290,942 times, which the Oversight Board said marks a 25% increase over the year prior. Two-thirds of those appeals concerned alleged violence/incitement and hate speech. The chart below shows the estimated percentage of appeals the Oversight Board has received, broken down by type of violating content, since it started taking submissions in late 2020. Though the share of hate speech appeals has dipped, appeals related to violence/incitement have skyrocketed in recent years.

The biggest portion of those appeals (45%) came from the United States and Canada, which have some of the world’s largest Facebook and Instagram user bases and whose users have been on both platforms for a comparatively long time. The vast majority of appeals (92%) came from users asking to have their accounts or content restored, compared with a far smaller share (8%) from users asking Meta to remove certain content.

“By publicly making these recommendations, and publicly monitoring Meta’s responses and implementation, we have opened a space for transparent dialogue with the company that did not previously exist,” the Oversight Board said.

Oversight Board overturned Meta’s decision in 75% of the cases it decided

Despite receiving more than a million appeals last year, the Oversight Board makes binding decisions in only a small handful of high-profile cases. Of the 12 decisions it published in 2022, the board overturned Meta’s original content moderation decision 75% of the time, according to its report. In 32 of the 50 cases up for review, Meta determined on its own that its original decision was incorrect. Fifty cases might not sound like much compared with more than a million appeals, but the Oversight Board says it tries to make up for that disparity by deliberately selecting cases that “raise underlying issues facing large numbers of users around the world.” In other words, those few cases should, in theory, address larger moderation problems pervading Meta’s social networks.

The nine content moderation decisions the Oversight Board overturned in 2022 covered a wide range of subjects. In one case, the board rebuked Meta for removing posts from a Facebook user asking for advice on how to talk to a doctor about Adderall. In a more recent case, the board overturned Meta’s decision to remove a Facebook post that compared the Russian army in Ukraine to Nazis. The controversial post notably included a poem calling for the killing of fascists, as well as an image of an apparent dead body. In cases like these, Meta is required to honor the board’s decision and implement moderation changes within seven days of the ruling’s publication.

Aside from overturning moderation decisions, the Oversight Board also spends much of its time on the less flashy but potentially just as important work of issuing policy recommendations. These recommendations can shift the way Meta interprets and enforces its content rules for its billions of users. In 2022, the board issued 91 policy recommendations. Many of them, in one way or another, called on Meta to be more transparent with users about why their content was removed. Too often, the Oversight Board notes in the report, users are “left guessing” why certain content was taken down.

In response to those transparency recommendations, Meta has reportedly begun telling people which specific policy they violated when the violation involves hate speech, dangerous organizations, bullying, or harassment. Meta will also tell users whether the decision to remove their content was made by a human or an automated system. The company also changed its strike system to address user complaints that their accounts were being unfairly locked in so-called “Facebook Jail.”

Meta also changed the way it handles crises and conflicts in response to Oversight Board pressure. The company developed a new Crisis Policy Protocol, which it used to gauge the possible risk of harm associated with reinstating former President Donald Trump’s account. While some speech advocates, like the ACLU, praised Meta’s decision to reinstate Trump, other rights groups, such as the Global Project Against Hate and Extremism and Media Matters, said it was premature. Meta also reviewed its policies on dangerous individuals and organizations to make them more risk-based. Now, the groups or individuals deemed most dangerous are prioritized for enforcement actions on its platforms.
