Google CEO Sundar Pichai is doing damage control. After a disastrous debut, Google's Bard AI is available to testers. However, the company's chatbot has been roundly criticized for its inability to keep up with OpenAI's ChatGPT and the ChatGPT-infused Microsoft Bing. Pichai appeared on the most recent episode of the New York Times Hard Fork podcast, promising upgrades to Bard within the next week.

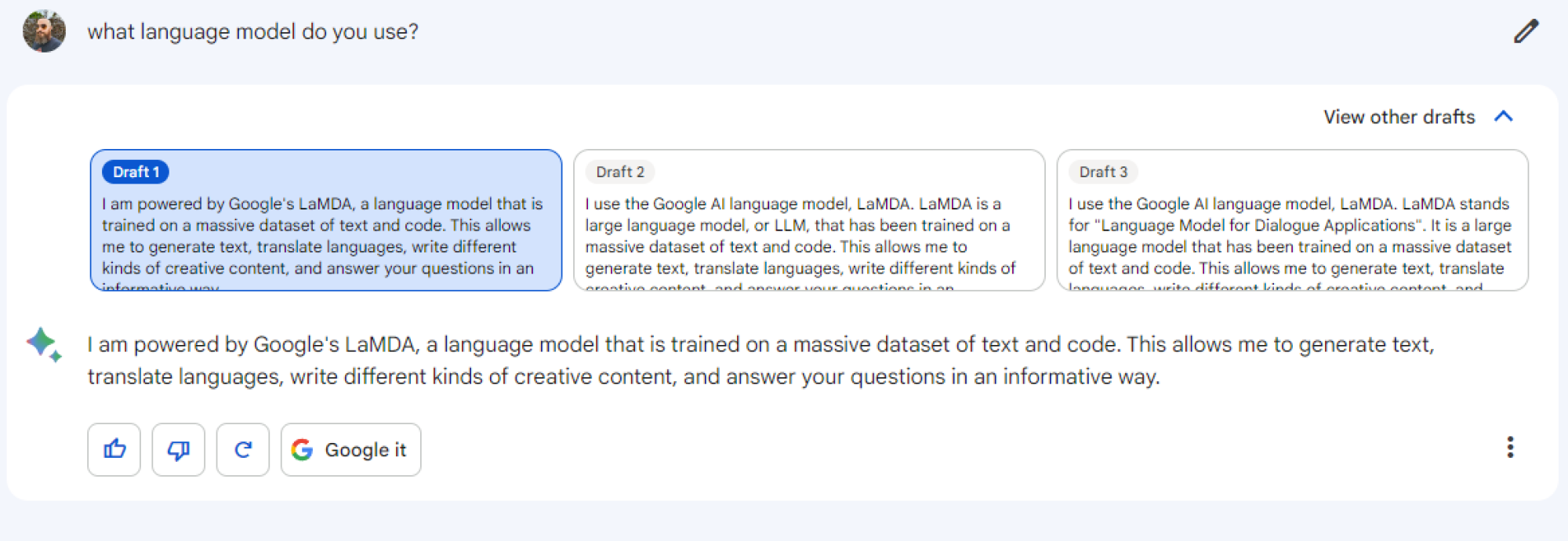

When Google rushed Bard out the door earlier this year, it was running a lightweight version of Google’s LaMDA language model. LaMDA is good at generating dialog, but it’s not as capable as ChatGPT and not designed for math or coding. Pichai says that Google is working to move Bard to its newer and more powerful PaLM models, and that could happen in as little as a week. On the podcast, Pichai compared the LaMDA-based Bard to a “souped-up Civic… in a race with more powerful cars.”

Pichai claims the decision to launch with the more limited LaMDA model was about caution: Google didn't want to deploy a machine-learning model that was too powerful for the application. He also touched on a recent open letter, signed by Elon Musk and others, that urges a six-month pause in AI development. He said the instinct to be concerned about AI has merit, but he believes current privacy and healthcare regulations are sufficient to keep AI in check.

Credit: Google

Google is playing catch-up with OpenAI, which, Pichai points out, has a lot of former Googlers on staff. Google invented the transformer machine learning model that underlies ChatGPT, publishing a study on it in 2017, but failed to leverage that technology in its own products. Pichai says it was a "pleasant surprise" to see the overwhelmingly positive user response to ChatGPT. He does not, however, subscribe to the idea that rolling out powerful AI chatbots is reckless.

Pichai also addressed some of the more fanciful predictions that we are barreling toward the creation of artificial general intelligence (AGI). Such a system would be sentient by many definitions, capable of human-like performance across a wide range of tasks. An AGI would change the information economy but could also make misinformation much harder to spot. "Can we have an AI system which can cause disinformation at scale? Yes. Is it AGI? It really doesn't matter," said Pichai. He suggests that humanity will be able to anticipate such a change and "evolve" to meet the challenge.

You can listen to the full podcast on the New York Times site.
