
Microsoft

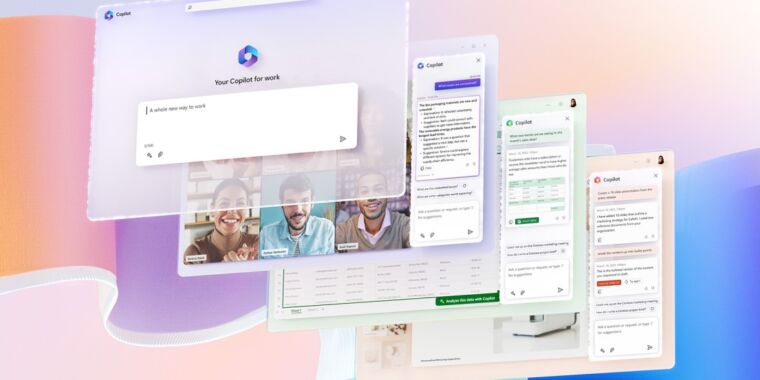

Today Microsoft took the wraps off Microsoft 365 Copilot, its rumored effort to build automated AI-powered content-generation features into all of the Microsoft 365 apps.

The capabilities Microsoft demonstrated make Copilot seem like a juiced-up version of Clippy, the oft-parodied and arguably beloved assistant from older versions of Microsoft Office. Copilot can automatically generate Outlook emails, Word documents, and PowerPoint decks, can automate data analysis in Excel, and can pull relevant points from the transcript of a Microsoft Teams meeting, among other features.

Microsoft is currently testing Copilot “with 20 customers, including eight in Fortune 500 enterprises.” The preview will be expanded to other organizations “in the coming months,” but the company didn’t mention when individual Microsoft 365 subscribers would be able to use the features. The company will “share more on pricing and licensing soon,” suggesting the feature may be a paid add-on on top of a Microsoft 365 subscription.

Demonstrating Copilot’s capabilities in Outlook.

Microsoft

In a video demonstrating Copilot features, Microsoft presenters showed Copilot generating emails and PowerPoint slides based on prompts. The core functionality is based on a large language model (LLM) like the GPT-4-based model used for Bing Chat, helped along by Microsoft Graph-supplied contextual information from elsewhere in Microsoft’s cloud. Microsoft says that Copilot’s LLM can be trained on data specific to an individual business, using your data “in a secure, compliant, privacy-preserving way” to make Copilot’s output more relevant.

Copilot was shown pulling in relevant images from OneDrive, inserting information from confirmation emails and calendar appointments from Outlook, and generating PowerPoint decks based on the information in a Word document. Copilot can also automate repetitive tasks, like adding animations and transitions to a PowerPoint slide show or fleshing out rough notes into a more polished document for public consumption.

Aware that AI content generators are prone to factual errors and other weird mistakes (often called “hallucinations”), Microsoft emphasized that Copilot is most useful for “first drafts” and “starting points.” It might not get every single fact in an email or presentation right, but users will be able to go through and tweak text, images, and formatting to make sure everything is correct. Copilot can also be used during the editing process, making points you’ve written more concise or automatically replacing an image in a PowerPoint deck with a more relevant one.

“Sometimes, Copilot will get it right,” said Microsoft VP of Modern Work and Business Applications Jared Spataro in the presentation. “Other times, it will be usefully wrong, giving you an idea that’s not perfect, but still gives you a head start.”

Microsoft also stressed its commitment to “building responsibly.” Despite allegedly laying off an entire team dedicated to AI ethics, Microsoft says it has “a multidisciplinary team of researchers, engineers and policy experts” looking for and mitigating “potential harms” by “refining training data, filtering to limit harmful content, query- and result-blocking sensitive topics, and applying Microsoft technologies like InterpretML and Fairlearn to help detect and correct data bias.” The system will also link to its sources and note limitations where appropriate.

Microsoft has been pushing AI-powered features in all of its biggest products this year, most notably in the Bing Chat preview, but also in Skype and Windows 11. It’s part of a multi-billion-dollar partnership with OpenAI, the company behind the ChatGPT chatbot, the Whisper transcription technology, and the DALL-E image generator.
