
Stable Diffusion / OpenAI

On Tuesday, OpenAI announced a sizable update to its large language model API offerings (including GPT-4 and gpt-3.5-turbo), featuring a new function-calling capability, significant cost reductions, and a 16,000-token context window option for the gpt-3.5-turbo model.

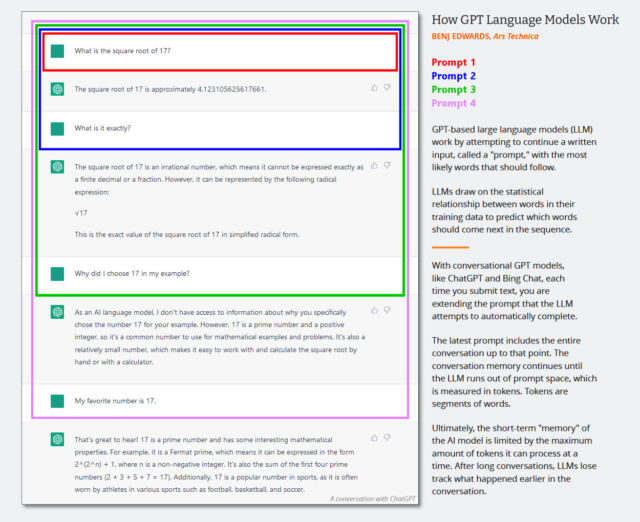

In large language models (LLMs), the “context window” is like a short-term memory that stores the contents of the prompt input or, in the case of a chatbot, the entire contents of the ongoing conversation. In language models, increasing context size has become a technological race, with Anthropic recently announcing a 100,000-token context window option for its Claude language model. In addition, OpenAI has developed a 32,000-token version of GPT-4, but it is not yet publicly available.

Along those lines, OpenAI just introduced a new 16,000-token context window version of gpt-3.5-turbo, called, unsurprisingly, “gpt-3.5-turbo-16k,” which can handle up to about 16,000 tokens of prompt and response combined. With four times the context length of the standard 4,000-token version, gpt-3.5-turbo-16k can process around 20 pages of text in a single request. This is a considerable boost for developers requiring the model to process and generate responses for larger chunks of text.
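Because the context window has to hold both the prompt and the model's reply, a developer choosing between the two gpt-3.5-turbo variants has to budget for both. The minimal sketch below assumes the token counts were already measured (for example with a tokenizer library) and uses assumed window sizes of 4,096 and 16,384 tokens; check OpenAI's model documentation for the exact current limits. The helper names `fits` and `pick_model` are hypothetical.

```python
# Approximate context-window sizes in tokens, assumed here for illustration;
# check OpenAI's model documentation for the exact current limits.
CONTEXT_LIMITS = {
    "gpt-3.5-turbo": 4_096,
    "gpt-3.5-turbo-16k": 16_384,
}


def fits(model: str, prompt_tokens: int, max_output_tokens: int) -> bool:
    """Return True if the prompt plus the reserved reply fit in the model's window."""
    return prompt_tokens + max_output_tokens <= CONTEXT_LIMITS[model]


def pick_model(prompt_tokens: int, max_output_tokens: int) -> str:
    """Prefer the cheaper 4K model, falling back to the 16K variant when needed."""
    for model in ("gpt-3.5-turbo", "gpt-3.5-turbo-16k"):
        if fits(model, prompt_tokens, max_output_tokens):
            return model
    raise ValueError("request exceeds every available context window")


print(pick_model(3_000, 500))     # gpt-3.5-turbo
print(pick_model(12_000, 1_000))  # gpt-3.5-turbo-16k
```

Trying the smaller model first reflects the pricing described later in this article, where the 16k variant costs roughly twice as much per token.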

As covered in detail in the announcement post, OpenAI listed at least four other major new changes to its GPT APIs:

- Introduction of function-calling feature in the Chat Completions API

- Improved and “more steerable” versions of GPT-4 and gpt-3.5-turbo

- A 75 percent price cut on the “ada” embeddings model

- A 25 percent price reduction on input tokens for gpt-3.5-turbo

With function calling, developers can now more easily build chatbots capable of calling external tools, converting natural language into external API calls, or making database queries. For example, it can convert a prompt such as “Email Anya to see if she wants to get coffee next Friday” into a call to a function like “send_email(to: string, body: string).” In particular, this feature will also allow for consistent JSON-formatted output, which API users previously had difficulty generating.
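To make the idea concrete, here is a rough sketch of the round trip, written against the request shape OpenAI documented for the June 2023 Chat Completions API, where developers describe their functions with JSON Schema under a `functions` key and the model answers with a `function_call` whose `arguments` field is a JSON string. The `send_email` function, the email address, and the assistant reply are all hypothetical and hand-written for illustration; no network request is made.

```python
import json

# Request body in the shape of the June 2023 Chat Completions API.
# send_email is a hypothetical developer-defined function, not an OpenAI API.
request_body = {
    "model": "gpt-3.5-turbo-0613",
    "messages": [
        {"role": "user",
         "content": "Email Anya to see if she wants to get coffee next Friday"},
    ],
    "functions": [
        {
            "name": "send_email",
            "description": "Send an email to a contact.",
            "parameters": {
                "type": "object",
                "properties": {
                    "to": {"type": "string", "description": "Recipient address"},
                    "body": {"type": "string", "description": "Message text"},
                },
                "required": ["to", "body"],
            },
        }
    ],
}

# Hand-written stand-in for an assistant message (not real model output).
# When the model opts to call a function, "arguments" arrives as a JSON string.
reply = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "send_email",
        "arguments": json.dumps({"to": "anya@example.com",
                                 "body": "Want to get coffee next Friday?"}),
    },
}


def send_email(to: str, body: str) -> str:
    """Hypothetical local implementation; a real app would call a mail service."""
    return f"sent to {to}: {body}"


# The model never runs code itself: the application parses the JSON arguments
# and dispatches to its own implementation.
LOCAL_FUNCTIONS = {"send_email": send_email}

call = reply["function_call"]
args = json.loads(call["arguments"])
result = LOCAL_FUNCTIONS[call["name"]](**args)
print(result)  # sent to anya@example.com: Want to get coffee next Friday?
```

In the API as announced, an application would typically send that result back to the model in a message with the role "function" so the model can phrase a final answer for the user.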

Regarding “steerability,” which is a fancy term for the process of getting the LLM to behave the way you want it to, OpenAI says its new “gpt-3.5-turbo-0613” model will include “more reliable steerability via the system message.” The system message in the API is a special directive prompt that tells the model how to behave, such as “You are Grimace. You only talk about milkshakes.”
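In practice, the system message is simply the first entry in the list of chat messages sent to the API. The short sketch below only builds the request body (sending it would need an HTTP client and an API key, both omitted); the helper name `build_chat_request` and the user question are hypothetical.

```python
import json


def build_chat_request(system_prompt: str, user_prompt: str,
                       model: str = "gpt-3.5-turbo-0613") -> dict:
    """Assemble a Chat Completions request body with the system message first."""
    return {
        "model": model,
        "messages": [
            # The system message sets persistent behavior for the conversation.
            {"role": "system", "content": system_prompt},
            # User turns follow; the model is expected to stay within the system rules.
            {"role": "user", "content": user_prompt},
        ],
    }


body = build_chat_request(
    "You are Grimace. You only talk about milkshakes.",
    "What's the capital of France?",
)
print(json.dumps(body, indent=2))
```

With a more steerable model, the hope is that a reply to an off-topic question like this one stays in character rather than ignoring the system instruction.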

In addition to the functional improvements, OpenAI is offering substantial cost reductions. Notably, the price of input tokens for the popular gpt-3.5-turbo has been reduced by 25 percent. This means developers can now use this model for approximately $0.0015 per 1,000 input tokens and $0.002 per 1,000 output tokens, equating to roughly 700 pages per dollar. The gpt-3.5-turbo-16k model is priced at $0.003 per 1,000 input tokens and $0.004 per 1,000 output tokens.
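Since input and output tokens are billed at different rates, the cost of a single request is a weighted sum of the two. This small calculator uses the per-1,000-token prices quoted above and Python's `decimal` module to avoid floating-point rounding; the 25 percent check assumes the previous gpt-3.5-turbo price was $0.002 per 1,000 tokens. The function name `request_cost` is hypothetical.

```python
from decimal import Decimal

# Per-1,000-token prices in US dollars, as quoted in the announcement.
PRICES = {
    "gpt-3.5-turbo": {"input": Decimal("0.0015"), "output": Decimal("0.002")},
    "gpt-3.5-turbo-16k": {"input": Decimal("0.003"), "output": Decimal("0.004")},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Dollar cost of one request, billing input and output tokens separately."""
    price = PRICES[model]
    return (price["input"] * input_tokens + price["output"] * output_tokens) / 1000


small = request_cost("gpt-3.5-turbo", 1_000, 1_000)
large = request_cost("gpt-3.5-turbo-16k", 12_000, 1_000)
print(small, large)

# Size of the input-token cut, assuming the old price was $0.002 per 1,000 tokens.
old_input = Decimal("0.002")
cut = (old_input - PRICES["gpt-3.5-turbo"]["input"]) / old_input
print(cut)
```

The first request (1,000 tokens in, 1,000 out) works out to $0.0035, the long 16k request (12,000 in, 1,000 out) to $0.04, and the computed cut is 0.25, matching the 25 percent figure.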

Benj Edwards / Ars Technica

Further, OpenAI is offering a massive 75 percent cost reduction for its “text-embedding-ada-002” embeddings model, which is more esoteric in use than its conversational brethren. An embeddings model is like a translator for computers, turning words and concepts into a numerical language that machines can understand, which is important for tasks like searching text and suggesting relevant content.
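A common way to use those numbers for search is to embed both the documents and the query, then rank documents by cosine similarity to the query vector. The sketch below uses tiny made-up 3-dimensional vectors in place of real embeddings (text-embedding-ada-002 actually returns 1,536-dimensional vectors); the document titles and the query are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors made up for illustration; real ones would come from the
# embeddings endpoint and have far more dimensions.
documents = {
    "How to brew espresso": [0.9, 0.1, 0.0],
    "Best milkshake recipes": [0.2, 0.9, 0.1],
    "Python list comprehensions": [0.0, 0.1, 0.95],
}
query_vector = [0.85, 0.2, 0.05]  # hypothetical embedding of "making coffee at home"

# Rank documents from most to least similar to the query.
ranked = sorted(
    documents,
    key=lambda title: cosine_similarity(query_vector, documents[title]),
    reverse=True,
)
print(ranked[0])  # How to brew espresso
```

Because every document has to be embedded once before it can be searched this way, a lower per-token price for the embeddings model matters most for large collections.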

Since OpenAI keeps updating its models, the old ones won’t be around forever. Today, the company also announced it is beginning the deprecation process for some earlier versions of these models, including gpt-3.5-turbo-0301 and gpt-4-0314. The company says that developers can continue to use these models until September 13, after which the older models will no longer be accessible.

It’s worth noting that OpenAI’s GPT-4 API is still locked behind a waitlist, so it is not yet widely available.
